Last week I was at the colo replacing some faulty disks and fixing a Hyper-V problem, and I had the chance to do my first ever RHEV 6.4 install. This was really my second attempt, as previously I’d foolishly installed RHEL Server and then enabled the “Virtualization Host” feature, when what I’d actually intended to do was install RHEV, a dedicated version of their hypervisor. This is all part of the process of me getting out of my VMware bubble and exposing myself (in a non-sexual way) to rival offerings, in a way that helps me better appreciate what VMware is doing. It’s also because technologies such as VMware vCloud Automation Center recognise and use these other virtualization environments. I guess my confusion over what to install is compounded by the fact that there are a couple of options for enabling virtualization in these sorts of environments:

- Standard RHEL install with “Virtualization Host”

- RHEV, which is an appliance-like version of the above

- RHEL with KVM enabled, suitable for support with OpenStack

I found the installation of RHEV to be blisteringly quick. I mean quicker to install than even ESXi. Perhaps the only qualification to that is the post-configuration process required to make it manageable. As with all appliance-like hypervisors, there’s a console ‘shell’ that needs to be used for tasks such as applying a hostname or setting IP addresses.

After booting to DVD and the usual preamble, the first thing you’re confronted with is which disk to install RHEV to:

The rather odd thing about this is how RHEV identified the local IBM ServeRAID controller inside my Lenovo TS200 as “FibreChannel”. That was rather funny, given that these boxes have no Fibre Channel connectivity. But looking at this interface again, I think “Local / FibreChannel” is meant to indicate EITHER local or Fibre Channel connected block storage.

One thing I was surprised to see with this “appliance” model was being asked to set up the partition table data… It’s been some time since I’ve had to worry about partitioning. In fact, I don’t think I’ve had to deal with this since the “Service Console” days of ESX “Classic” (as I like to call it).

After this you set a password, watch a status bar, and then blink and the install is complete. As I said earlier, one of the impressive things about RHEV is how quickly it installs. After a reboot, the next thing you will be asked to do is log in as “admin” (not root), which by default opens the console front-end to RHEV. To me it’s very much like the DCUI (Direct Console User Interface) that acts as the head on an ESXi server.

As with a lot of Linux installs, all the physical NIC interfaces are disabled and unconfigured by default. So after setting things like the hostname, DNS, and NTP, you need to select a physical NIC (eth0/eth1/eth2/eth3). One consistent thing I’ve seen across all the different hypervisors I’ve installed so far (ESX, Hyper-V and RHEV) is how the PCI bus on my Lenovo is enumerated in a skewed way.

So eth0 and eth3 are actually two NIC interfaces on the SAME dual-port card, whereas eth1 and eth2 share the other dual-port device.
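On a general-purpose Linux box, this kind of skewed enumeration can be checked by asking sysfs which PCI device sits behind each interface. What follows is only a minimal sketch under assumptions: it assumes sysfs is mounted at `/sys` and that Python is available, neither of which I have confirmed on the RHEV console itself. The function names `pci_address` and `group_by_card` are my own, purely for illustration. Ports on the same physical card normally share the same domain:bus:device part of the address and differ only in the function number after the dot.

```python
import os
from collections import defaultdict


def pci_address(iface, sysfs_root="/sys/class/net"):
    """Return the sysfs device name behind iface (for a PCI NIC this is an
    address such as '0000:0b:00.1'), or None if there is no backing device,
    as is the case for virtual interfaces like lo."""
    device_link = os.path.join(sysfs_root, iface, "device")
    if not os.path.exists(device_link):
        return None
    return os.path.basename(os.path.realpath(device_link))


def group_by_card(addresses):
    """Group interface names by PCI slot (domain:bus:device), dropping the
    function number so that both ports of a dual-port card land together."""
    cards = defaultdict(list)
    for iface, addr in addresses.items():
        if not addr:
            continue  # skip interfaces with no backing device
        slot = addr.rsplit(".", 1)[0]
        cards[slot].append(iface)
    return {slot: sorted(names) for slot, names in cards.items()}


if __name__ == "__main__":
    root = "/sys/class/net"
    names = os.listdir(root) if os.path.isdir(root) else []
    found = {name: pci_address(name) for name in names}
    for slot, ifaces in sorted(group_by_card(found).items()):
        print(slot, ", ".join(ifaces))
```

With made-up addresses, `group_by_card({"eth0": "0000:0b:00.0", "eth3": "0000:0b:00.1"})` returns `{"0000:0b:00": ["eth0", "eth3"]}`, which is the pattern I would expect for two ports on one card.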

It’s a relatively simple affair to select a device and configure it for management purposes.

One thing I rather like is the option “Flash Lights to Identify”, which sends a pulse to the physical NIC, easing the process of checking you’re selecting the right NIC. That’s something I would love to see the VMware DCUI do as well…
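I don’t know what the RHEV console runs under the covers for this, but on a regular Linux host the usual way to blink a port’s LED is `ethtool -p <interface> [seconds]`. Here is a minimal sketch that wraps that command, assuming `ethtool` is installed, the NIC driver supports identification, and you are running as root. The helper names `identify_command` and `blink` are hypothetical, not part of any RHEV tooling.

```python
import subprocess
import sys


def identify_command(iface, seconds=10):
    """Build the ethtool command line that blinks the LED on iface."""
    if not iface or seconds <= 0:
        raise ValueError("need an interface name and a positive duration")
    return ["ethtool", "-p", iface, str(seconds)]


def blink(iface, seconds=10):
    """Blink the port LED; raises CalledProcessError if ethtool fails,
    for example when the driver does not support identification."""
    subprocess.run(identify_command(iface, seconds), check=True)


# Only blink when an interface name is given on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    blink(sys.argv[1])
```

Keeping the command construction separate from the call that runs it makes it easy to check what would be executed before touching real hardware.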

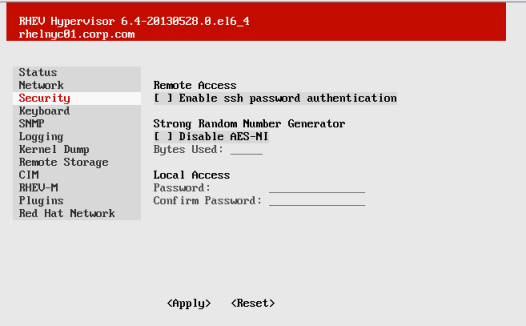

Finally, I enabled SSH on these boxes so I could log in as “admin” remotely and gain access to this console again…

… I was pretty impressed with the ease of install of RHEV, compared to, say, something like Windows Hyper-V. It’s very much appliance/ESXi-like, which is the way I think hypervisors should be. The only weirdness I saw with the RHEV console is when you go about switching between the menus. Sometimes the entire screen goes red, or as I call it, red text on a red background! I don’t know why this happens; it could be something odd about the onboard graphics card and the screen I was using at the colo. But needless to say, I’ve not seen this happen with the VMware ESXi DCUI…