I spent the May bank holiday in Melbourne, Derbyshire, on a songwriting workshop with Edwina Hayes. Melbourne is a lovely little market town south of […]

CD Launch Party

So last Saturday (27 May) was my launch party at the lovely Coach House Studios in Wirksworth. I think it was a great success. There […]

DEMO: The Reckoning

This is my entry for the “Talent is Timeless” monthly competition on “setting sail”. I’d have to say the idea of writing a song within […]

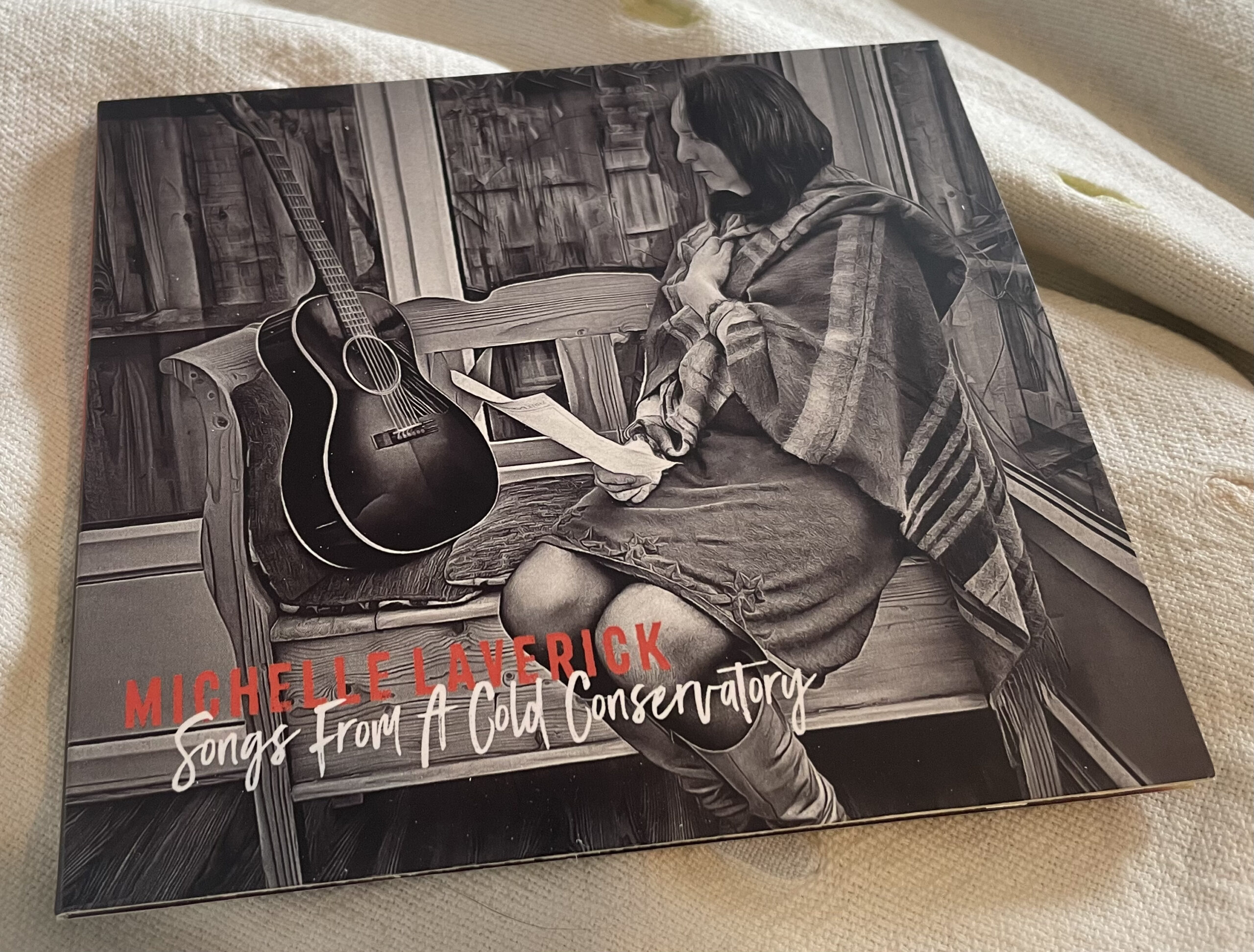

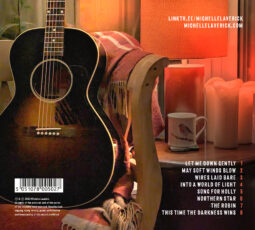

Debut Album Released: Songs from a Cold Conservatory

Songs From A Cold Conservatory by Michelle Laverick

I’m delighted to announce that my debut album is available today. I spent last weekend fulfilling the […]

Progress Report: Debut Album Artwork

So it’s been a while since I blogged about the debut album. The main news is the CD is now at the pressing plant. I […]

DEMO: Woman on a wire

So this is a song that I improvised on my iPhone back in October 2023. I had hoped to sneak it onto my debut album, but […]

DEMO: Bring Her Home (Song for Brianna Ghey)

So this is a demo of a new song called “Bring Her Home” – a very rough cut just from my spare bedroom studio. The […]

Talent Is Timeless

So I’ve entered a songwriting competition – my first! I’m using the last song from my soon-to-be-released debut album, “Songs from a Cold Conservatory”. […]

Debut Album: Updates, Launch and Merch

I can’t believe I haven’t published anything here since November 2022. I guess I’ve been focusing on my recovery (I recently had an […]

Debut Album: Songs from a Cold Conservatory

I’m currently working on my debut album. I’m recording at Cobbler Cottage Studios in Crich, Derbyshire. The working title is “Songs from a Cold Conservatory” […]