In my previous post I looked at load-balancing a simple web-server setup with the vCNS Edge Gateway. This time I’m looking at something a little more involved: my own VMware View environment, which I now use to get remote access to my colo in the UK. What’s great about the Edge Gateway in this respect is that it does all the work my previous environment used to do, Firewall/NAT and now load-balancing. Whereas previously I needed multiple devices to do this work, I can now do it all in software with a click of the mouse.

As you might know I made the switch to using VMware View about 2-3 months ago, a switch that was long overdue. During the course of setting this up I worked pretty closely with my colleagues to learn what’s supported and how this configuration works. That’s worth stating upfront before I dive in. View and the Edge Gateway’s load-balancing do indeed work together: you can enable load-balancing on the array of Security Servers that are in turn paired with the Connection Servers. Once a Security Server has been selected, the PCoIP session is established directly to it, and to it alone. That’s to avoid a situation where a 443 session is established to one Security Server (say SS01), but through the act of load-balancing the PCoIP 4172 session is established to a different Security Server (say SS02). It’s worth mentioning that the load-balancer on the vCNS Edge Gateway supports TCP sessions only, and PCoIP requires TCP/UDP. This rather convoluted explanation does result in the same outcome: because the Security Server connection on 443 is load-balanced, the resultant 4172 sessions are distributed across the View infrastructure. That’s achieved by making sure each Security Server returns its own unique IP address for the Internet…
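To make that two-step relationship concrete, here is a minimal Python sketch. It is a toy model rather than anything View or the Edge Gateway actually runs: the `SecurityServer` class and `connect` function are hypothetical names, and a simple round-robin picker stands in for whatever method the load-balancer uses. The point it illustrates is that only the 443 step is balanced, while the PCoIP address comes from the server that was chosen.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class SecurityServer:
    name: str
    internal_ip: str        # pool member address behind the Edge Gateway
    pcoip_external_ip: str  # unique public address handed back to the client


SERVERS = [
    SecurityServer("corphqSS01", "172.168.5.21", "81.82.83.84"),
    SecurityServer("corphqSS02", "172.168.5.22", "81.82.83.85"),
]


def connect(picker):
    """Return (server chosen on 443, address the client then uses for PCoIP)."""
    chosen = next(picker)  # step 1: the load-balanced HTTPS/443 connection
    # Step 2: PCoIP on 4172 goes straight to the chosen server's own address,
    # so it never passes through the load-balancer.
    return chosen.name, f"{chosen.pcoip_external_ip}:4172"


picker = cycle(SERVERS)  # assumption: round-robin stands in for the balancer
sessions = [connect(picker) for _ in range(4)]
for name, pcoip_target in sessions:
    print(name, pcoip_target)
```

Because each 443 connection and its PCoIP session always land on the same Security Server, spreading the 443 connections is enough to spread the PCoIP sessions too.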

To explain the relationship/process I decided to do an educreations.com whiteboard session:

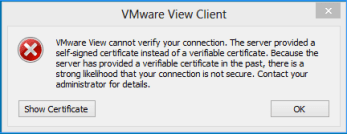

Personally, I think it’s worth sorting out the certificates on the View Security/Connection Servers before embarking on this configuration. Otherwise you can get some “certificate” strangeness as clients connect to different Security Servers during the load-balancing. It can occasionally look like some sort of man-in-the-middle attack:

You can confirm you have valid and trusted certificates by checking the “System Health” of the View environment. Little green boxes indicate the Connection and Security Servers are valid and operational. I reused the certificates that I generated when I was writing eucbook.com last year with Barry Coombs.

Setting up the Security & Connection Servers

Setting up more than one Connection Server/Security Server is, I think, a relatively trivial affair. One of the big selling points of the VMware View product is how easy it is to install and set up when compared to legacy systems that have been on the block for some time, and weren’t originally designed for virtual desktops. However, it’s worth pointing out that the Security Server in this context, and its paired Connection Server, are configured slightly differently from the configuration I worked on in last year’s book.

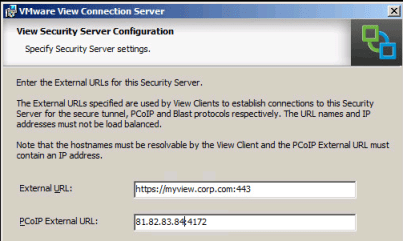

At the end of the installation of the View Security Server you’ll need to specify the external DNS name and external IP address used by the View Clients (across the Internet) to get to the View environment.

The “External URL” value remains the same: it points to a DNS name resolvable on the Internet, and fundamentally points at the load-balancer’s “virtual server” IP address, which in my case is going to be 172.168.5.99. What’s different is the PCoIP External URL (or IP address). My first Security Server will use 81.82.83.84 and my second Security Server will use 81.82.83.85. In the eucbook.com configuration BOTH Security Servers SHARED the SAME external IP address; here they each have an IP of their own.

The common mistake here (generally, even without using the vCNS Edge Gateway for load-balancing) is forgetting that although this configures the Security Server, the Connection Server to which it will be paired needs its configuration updating as well. Under >>View Configuration, >>Servers, and the “Connection Servers” tab, the affected Connection Server needs to be told what the external URL and IP address are, and that they are to be used in a “Secure Gateway” mode.
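As a rough sanity check of that rule (one shared External URL, one unique PCoIP address per Security Server), the settings can be written down as data and compared. This is only a sketch: `SecurityServerConfig` and `check_configs` are hypothetical names, nothing here talks to View, and the `https://myview.corp.com:443` and `ip:4172` value formats are my assumption of how the fields are typically filled in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityServerConfig:
    name: str
    external_url: str    # shared: resolves to the load-balanced address
    pcoip_external: str  # unique per Security Server


def check_configs(configs):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if len({c.external_url for c in configs}) != 1:
        problems.append("External URL differs between Security Servers")
    pcoip = [c.pcoip_external for c in configs]
    if len(set(pcoip)) != len(pcoip):
        problems.append("PCoIP External URL is shared between Security Servers")
    return problems


good = [
    SecurityServerConfig("corphqSS01", "https://myview.corp.com:443", "81.82.83.84:4172"),
    SecurityServerConfig("corphqSS02", "https://myview.corp.com:443", "81.82.83.85:4172"),
]
# The older style, where both servers shared one external address.
shared = [
    SecurityServerConfig("corphqSS01", "https://myview.corp.com:443", "81.82.83.84:4172"),
    SecurityServerConfig("corphqSS02", "https://myview.corp.com:443", "81.82.83.84:4172"),
]
print(check_configs(good))
print(check_configs(shared))
```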

So my first Connection Server is configured to use the 81.82.83.84 address:

Whereas my second Connection Server is configured to the 81.82.83.85 address:

VERY USEFUL TIP: You might want to test the Security Servers to confirm that a connection can be made through them. During the “testing phase” you might find it handy to temporarily set the “External URL” for the HTTP(s) Tunnel to be the SAME IP address as the PCoIP External URL for the Secure Gateway. That’s because the External URL myview.corp.com might be registered to another IP address (81.82.83.86 for instance) which is yet to be configured. By making both fields contain the SAME IP address you guarantee you’re not connecting to any other secondary system.

The interesting thing about this test is that both Security Servers can (and in my view should) be made available via a DNAT/Firewall rule and tested independently, first by connecting via 81.82.83.84 and then via 81.82.83.85. In this case the reference to 443 is merely there to check whether a direct connection to each of the Security Servers works properly. These rules are largely temporary, because later on in the configuration 443 will be switched to resolve to the load-balanced “virtual server” address.
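If you want a quick check from outside before firing up the View Client, a plain TCP connect test against each public address is enough to show whether the temporary 443 rule is passing traffic. The helper below is a minimal sketch using only Python’s standard library; `tcp_port_open` is a hypothetical name of my own. It only proves that a TCP handshake completes. It says nothing about certificates, and it cannot test the UDP side of PCoIP on 4172.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Example use against the two temporary test addresses from this post:
#   for ip in ("81.82.83.84", "81.82.83.85"):
#       print(ip, tcp_port_open(ip, 443))
```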

Note: Here 192.168.3.10 and 192.168.3.11 are used on the external interfaces of my Edge Gateway. The first, 192.168.3.10, points to 172.168.5.21 (corphqSS01) and the second, 192.168.3.11, points to 172.168.5.22 (corphqSS02).

I also updated my firewall configuration to make sure both Security Servers were accessible. Again, the reference to 443 is a temporary one for testing purposes only. During the test I just typed the raw public IP address into the View Client like so:

Admittedly, my firewall configuration is a bit unusual. I do have a Juniper Firewall at the colocation, but I’ve asked my colocation provider to take two of my IP addresses (81.82.83.84/81.82.83.85) and make the rule ANY:ANY to 192.168.3.10/192.168.3.11. The effect is that traffic coming in on 81.82.83.84/85 is redirected to the external interfaces of my vCNS Edge Gateway. This makes the Edge Gateway act as my firewall in my lab environment. So the flow of traffic is like this: 81.82.83.84 >> 192.168.3.10 >> 172.168.5.21 and 81.82.83.85 >> 192.168.3.11 >> 172.168.5.22. I imagine in a real multi-tenant environment things could be made much simpler by granting each tenant a block of 81.82.83.x addresses on the External Network within vCloud Director. However, for me this mapping was easier to request and required fewer changes to get up and running.
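The two hops in that flow are easy to lose track of, so here is the same mapping written as two lookup tables. It is purely illustrative: the table names and the `trace` helper are my own, and it models only the direct-to-Security-Server test rules described above.

```python
# Hop 1: the colocation provider's ANY:ANY rule on the Juniper firewall.
PROVIDER_NAT = {
    "81.82.83.84": "192.168.3.10",
    "81.82.83.85": "192.168.3.11",
}

# Hop 2: the DNAT rules on the vCNS Edge Gateway.
EDGE_DNAT = {
    "192.168.3.10": "172.168.5.21",  # corphqSS01
    "192.168.3.11": "172.168.5.22",  # corphqSS02
}


def trace(public_ip):
    """Return the chain of addresses a packet to public_ip passes through."""
    edge_ip = PROVIDER_NAT[public_ip]
    internal_ip = EDGE_DNAT[edge_ip]
    return [public_ip, edge_ip, internal_ip]


for ip in PROVIDER_NAT:
    print(" >> ".join(trace(ip)))
```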

Enabling Load-Balancing for the Security Servers

Having confirmed that all four View Servers (corphqCS01, corphqSS01, corphqCS02, corphqSS02) worked correctly, I was ready to try load-balancing my View environment across the Edge Gateway. I followed the configuration of the web-servers example, only this time I used 443 as the port number to connect to.

It’s perhaps worth restating the terminology before we set to. A pool (listed under Pool Servers) is merely a grouping of nodes in a vApp, a collection of VMs if you like. These “members” are generally identically configured systems, such as the Security Servers that make up a VMware View environment. When you define a pool you give it a name, and then you select which TCP ports it will listen on. In our case that will be HTTPs/443, which is the default for the Security Servers. It’s also possible to configure a “Health Check”, which is used to monitor the members in the pool to confirm they are still active/functional. There are timeout values that may be configured to say how long a member must be unresponsive before it’s considered “offline” and unavailable. Adding a member is achieved by typing in its IP address (the external IP address valid for the Organization Network, if you are using a vApp Network).

1. First I defined a pool for the members:

2. Next I enabled load-balancing using the least-connected method for port 443/https

Note: You’ll see I’m using “least connected” as the load-balancing type, as I felt that was perhaps a better way of checking how “loaded” the Security Server was…
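For anyone unfamiliar with the idea, “least connected” simply means each new connection goes to the member that currently has the fewest open connections. The sketch below shows the general technique in a few lines of Python. It is not the Edge Gateway’s implementation, the function names are hypothetical, and the tie-breaking (first member listed wins) is an assumption of this example.

```python
def pick_least_connected(active):
    """Return the member with the fewest active connections.

    `active` maps member name -> current connection count. On a tie,
    min() returns the first member in dictionary order.
    """
    return min(active, key=active.get)


def open_connection(active):
    """Choose a member for a new connection and record it."""
    member = pick_least_connected(active)
    active[member] += 1
    return member


active = {"corphqSS01": 0, "corphqSS02": 0}
assigned = [open_connection(active) for _ in range(3)]
print(assigned)  # three new sessions alternate between the two members
print(active)
```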

3. Then I set the monitor port (also 443) for the Health Check

Health Check Settings Note:

With the HTTP Health Check, an HTTP “GET” method is used to detect server status. Responses of 2xx and 3xx are valid, whereas other responses (including a lack of response) indicate a server failure. The URI used for the HTTP GET requests can be specified in the text field. With the HTTPs Health Check, SSL connections are made to the servers using SSLv3 client hello messages. The server is considered valid only when the response contains server “hello” messages. Finally, with the TCP Health Check a message is sent using whatever TCP port is configured, and the system merely waits for a response.

The values after the Health Check port settings control the various timeout and retry values that engage when a member of the pool becomes unresponsive. The “Interval” controls how frequently a member is contacted, in this case every 5 sec. The Health Check then waits 15 sec, referred to as the “Timeout”, for a response. If there isn’t a response, the “Unhealth Threshold” allows 3 failed attempts before a server is declared dead. When the member comes back online, the “Health Threshold” requires 2 successful health check responses before it is considered functional again. As ever with these sorts of settings, you need numbers that tolerate a slight outage (often referred to in the trade as “network jitter”), but not values so long that it takes forever for a member to be marked as down. Otherwise you have clients being directed to a system that is unavailable.
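The HTTP rule in particular boils down to a one-line test on the status code. A small sketch, with a hypothetical function name and `None` used to represent “no response at all”:

```python
def http_check_passes(status):
    """True for 2xx/3xx responses; False for anything else or no response.

    `status` is an integer HTTP status code, or None if nothing came back.
    """
    return status is not None and 200 <= status < 400


for status in (200, 302, 404, 503, None):
    print(status, http_check_passes(status))
```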
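The two thresholds are easier to picture as a small state machine: a member only changes state after enough consecutive results that contradict its current state. The class below is a sketch of that general pattern using the values from my configuration (3 failures to go down, 2 successes to come back). `MemberHealth` is a hypothetical name, and how the Edge Gateway counts internally is an assumption on my part.

```python
from dataclasses import dataclass


@dataclass
class MemberHealth:
    unhealth_threshold: int = 3  # consecutive failures before marking down
    health_threshold: int = 2    # consecutive successes before marking up
    up: bool = True
    streak: int = 0              # consecutive results contradicting `up`

    def record(self, check_ok):
        """Feed in one health check result; return the resulting state."""
        if check_ok == self.up:
            self.streak = 0  # the result agrees with the current state
        else:
            self.streak += 1
            needed = self.unhealth_threshold if self.up else self.health_threshold
            if self.streak >= needed:
                self.up = not self.up
                self.streak = 0
        return self.up


member = MemberHealth()
checks = [False, False, True, False, False, False, True, True]
states = [member.record(ok) for ok in checks]
print(states)
```

In this run the first two failures are forgiven because a success follows them (the “jitter” case). The member is only marked down after three failures in a row, and it takes two clean checks to bring it back.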

4. Then I added the members to the pool (172.168.5.21 and 172.168.5.22)

Note: The Ratio Weight can be used to temporarily take a member offline by setting the value to 0. It’s perhaps worth checking the status of the pool.

Now that the “pool” was created, the next step was to add the “virtual server”. That might sound a little bit odd to the ears of folks in the virtualization world, but the term “virtual server” is often used in the world of network load-balancing to refer to the single IP address that’s used to represent the pool. Some network load-balancers refer to this as the “cluster IP address”, as it’s the single IP that represents multiple members in a cluster or pool. If it makes you feel more comfortable you could call this a “shared alias IP address”. It’s the one that sits in front of my Security Servers (corphqSS01 and corphqSS02), intercepting HTTPs/443 requests and relaying each request on to one of the Security Servers in the pool, what we would call a “member”.

5. The next step was to switch to the “Virtual Servers” tab and set the IP used for inbound load-balancing on 443 to the pool. This is the “shared” IP that will actually be used by the initial connection.

Note: The typical mistake people make here is selecting the wrong pool under “Pool”. If you have multiple pools you must select the right pool for the load-balancing to take place. Sounds numb-nut obvious, but in my haste I have occasionally selected the wrong pool.

Reconfigure DNAT and Firewall Rules

Next all I had to do was update my DNAT/Firewall rules to make sure that HTTPs/443 was directed to the load-balancing node rather than to the Security Servers directly:

Note: Here I’m reusing 192.168.3.10 for 443 load-balancing. That works fine because when 443 requests come into 192.168.3.10, they are directed to the load-balancing node (172.168.5.99). However, if SS01 is selected by the load-balancer, then 192.168.3.10 will redirect traffic directly to SS01 on 172.168.5.21 using TCP/UDP port 4172. That means the public IP address that backs 192.168.3.10 (81.82.83.84) is the one listed in public DNS, in my case as view.corp.com.
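Put as a lookup table, the rules after this change look roughly like the sketch below. The 192.168.3.10 entries come straight from the note above; the 192.168.3.11 entries for SS02 are my assumption by symmetry, and the table layout and `translate` helper are purely illustrative rather than an export of the real rule set.

```python
# (destination on the Edge's external side, protocol, port) -> internal target
DNAT_RULES = {
    ("192.168.3.10", "tcp", 443): "172.168.5.99",   # load-balanced virtual server
    ("192.168.3.10", "tcp", 4172): "172.168.5.21",  # PCoIP direct to corphqSS01
    ("192.168.3.10", "udp", 4172): "172.168.5.21",
    ("192.168.3.11", "tcp", 4172): "172.168.5.22",  # assumed: PCoIP to corphqSS02
    ("192.168.3.11", "udp", 4172): "172.168.5.22",
}


def translate(dst_ip, proto, port):
    """Return the internal address for a packet, or None if no rule matches."""
    return DNAT_RULES.get((dst_ip, proto, port))


print(translate("192.168.3.10", "tcp", 443))   # goes to the load-balancer
print(translate("192.168.3.10", "udp", 4172))  # goes straight to SS01
print(translate("192.168.3.11", "tcp", 443))   # no direct 443 rule any more
```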

Testing

Of course, none of this matters a jot if you don’t get load distributed across multiple Security Servers. Before you continue you might want to update your Security Server and Connection Server configuration. If you remember, I temporarily set the “External URL” values to be their own public IP addresses. What I actually want to do is set these up to use the URL which is publicly listed on DNS like so:

So I established two connections from two independent devices to two different desktops, one Windows 7 and the other Windows XP. As you can see the vCNS Load-Balancer distributed the load across both Security Servers.