I recently got interested in the whole concept of nesting. In virtualization, nesting describes running a hypervisor inside a VM hosted by another hypervisor. For example, you might use VMware Fusion or VMware Workstation to run ESX in a VM and build a “Virtual Lab” in which to learn more about VMware’s enterprise technologies. A similar setup is possible with ESX itself, using a physical ESX (pESX) host to run a virtual ESX (vESX) host for the same reason. With my new-found interest in the vCloud Suite, I’m interested in building out a similar setup within vCloud Director. Such a configuration is not “new” as such: it has formed the bedrock of the Hands-On Labs at the last couple of VMworlds, and it’s also the foundation for what’s called vSEL, a “pod”-based setup that allows an SE to quickly demonstrate the product range without having to spin up and maintain a lab. This sort of setup is new to me, though, and that’s what counts. So I’m interested in learning more about how this is configured, and perhaps even how to automate it.

My interest comes from another angle too. I’ve got this idea that it would be really cool if one or more of our “vCloud Service Providers” were able to automate such a configuration to allow folks in the vCommunity to have their own “Virtual Lab in the Cloud”. So rather than needing to build a megaPC along the lines of Simon Gallagher’s vTARDIS, or an Array-of-Inexpensive-PCs (AIPCs) with a physical OpenFiler/FreeNAS box, people could use a pay-as-you-go model with a vCloud Service Provider instead. As for me, I’m not sure if I will be keeping my Lab-in-a-Colo. It’s quite a pricey undertaking…

So anyway, after my vINCEPTION post I started experimenting with running vESX and vCenter in a vApp on my lab kit. If you remember, I imagined the physical layer/vSphere layer as vINCEPTION Level0, the vApp running vESX and vCenter (and perhaps other components like vCloud Director itself) as vINCEPTION Level1, and any vApps created in this layer as vINCEPTION Level2. One of my anxieties with vINCEPTION Level1 is the disk IOPS generated by running vINCEPTION Level2 guests on top. Most homelabs get round this by using SSD to reduce the IOPS tax. SSD is not something I have in my lab.

There are really two options with vESX in terms of storage: you either give the vESX hosts direct access to the physical storage layer, or you run some kind of virtual storage appliance in the same context/layer of vINCEPTION as the vESX hosts. There are security concerns associated with the first method, as it could be regarded as breaching multi-tenancy rules. BUT one of the common use cases for having a direct-connected (non-routed) connection from the guest operating system in a vApp is when you want to enable, say, an iSCSI initiator in a VM to talk directly to the storage layer. What’s a bit “unusual” in my case is that I’m offering that native storage access to the vESX hosts. Why? Well, I figure that using enterprise-class storage like my NetApps or my EqualLogics, which are VAAI/VASA capable, would significantly reduce the overhead in a nested ESX configuration. The downside of this approach is how hard it would be to deploy if I were a vCloud Service Provider, where I might want to deploy many “Home Lab in the Cloud” instances for all my subscribers, or “tenants” if you want to use that word. I know that in our HoL and vSEL environments the second approach (the virtual storage appliance) is favoured, as it helps in the build-up and teardown process.

Because I’m a curious kind of fellow (in all senses of the word) I’m actually going to do both. I want to know how it is done and share it with you guys, but I’ve also been looking for a reason to play with the newly minted NetApp VSA, as well as our own VSA and vSAN or “Distributed Storage” projects. This sort of step isn’t new to me personally. The first VSA I used was the LeftHand VSA, to write the first SRM book. Back then all I had was a dumb storage box with the VSA on top. I guess nowadays we would call that “Software-defined Storage”. I’m joking. I wasn’t being visionary back then; I just had so few storage resources that necessity was the mother of invention. Anyway, less blathering on. Let’s get a-configuring.

Before I begin I want to make sure my vESX systems will have access out through the pESX hosts and directly onto the physical storage. To achieve this I need to make a couple of configuration changes. Firstly, I need a vSwitch portgroup with the correct VLAN configuration (if necessary) to reach the storage. vCloud Director will later use this portgroup to connect to the storage network directly (non-routed, i.e. not using a vCNS Edge Gateway at all). The networking path is OrgNetwork to ExternalNetwork to StorageNetwork…

So first I need to create a portgroup manually on my “Infrastructure DvSwitch” to allow the access. The vmnic backing this network is physically patched into a different physical switch (my Dell PowerConnect, kindly donated by the Dell EqualLogic team in Nashua, New Hampshire). Normally, the VMs in my vApps have no access to this DvSwitch at all.

So here the portgroup called “ExternalNetworkStorage” will be backing the “External Network” defined in vCloud Director. In my case all my “infrastructure” traffic is running on VLAN0. That’s not “best practise” as you generally would have each type of traffic VLAN’d out. In my case the “Virtual Datacenter DvSwitch” is where I’ve got my VLAN implementation so I can use VLAN-backed pools for my tenants.

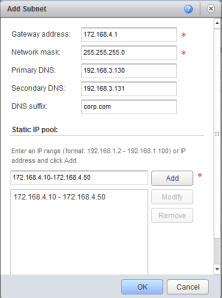

When you create this external network you will be required by vCD to create a “Static Pool”. Normally, this static pool is used to assign IP addresses either to VMs in a vApp that are directly connected to the network (such a configuration breaks the “multi-tenancy” rule) or to an Edge Gateway device that securely connects an Organization Network to the External Network. Now, vCD doesn’t support vESX as a guest operating system, and therefore cannot do the “customization” that would normally configure an IP address for the guest OS NIC. In vESX (as in pESX) you have to create a VMkernel port to access the physical storage layer. So it’s really up to you what you want to do here: configure a tiny scope, or create a reasonably sized one for use by ordinary vApps.
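
To make the “tiny scope or reasonable one” choice a bit more concrete, here is a minimal Python sketch that works out the first and last address of a static pool inside a subnet. This is only planning arithmetic, not a vCloud Director API call; the `static_pool` helper and the 192.168.50.0/24 subnet are my own assumptions for illustration, so substitute whatever your storage network really uses.

```python
import ipaddress
from typing import Tuple


def static_pool(cidr: str, first_offset: int, size: int) -> Tuple[str, str]:
    """Return the (first, last) address of a contiguous pool inside cidr.

    first_offset is a zero-based index into the usable host addresses.
    Hypothetical helper for planning only; it does not talk to vCD.
    """
    hosts = list(ipaddress.ip_network(cidr).hosts())
    if first_offset < 0 or size < 1 or first_offset + size > len(hosts):
        raise ValueError("pool does not fit inside the network")
    pool = hosts[first_offset:first_offset + size]
    return str(pool[0]), str(pool[-1])


# Assumed example subnet: a tiny 5-address scope versus a larger 50-address one.
tiny = static_pool("192.168.50.0/24", first_offset=199, size=5)
larger = static_pool("192.168.50.0/24", first_offset=99, size=50)
print("tiny pool:  ", tiny)
print("larger pool:", larger)
```

The resulting range is what you would type into the static pool field by hand. Since the vESX VMkernel ports are addressed manually anyway, it makes sense to keep their addresses outside whichever range you pick.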

Next I need an Organization Network in the Organization Virtual Datacenter where my vSphere vApp will reside. In this case I want a “direct connection” so that my vESX hosts’ demands on the storage layer are not driven through the NAT/firewall of the vCNS Edge Gateway. So I created an Organization Network “connected directly to an External Network”.
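
If it helps to picture the difference between a direct and a routed Organization Network, here is a small Python model of the OrgNetwork to ExternalNetwork to StorageNetwork chain. It is purely illustrative and is not how vCD represents networks internally. The `Network` class, the `routed` flag and every network name other than “ExternalNetworkStorage” are assumptions of mine.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Network:
    """One hop in the chain; routed=True means an Edge Gateway sits on the uplink."""
    name: str
    uplink: Optional["Network"] = None
    routed: bool = False


def path_to_storage(net: Network) -> List[str]:
    """Walk the uplinks and return the network names in order."""
    names = []
    current: Optional[Network] = net
    while current is not None:
        names.append(current.name)
        current = current.uplink
    return names


def crosses_edge_gateway(net: Network) -> bool:
    """True if any hop on the way to storage goes through NAT/firewall."""
    current: Optional[Network] = net
    while current is not None:
        if current.routed:
            return True
        current = current.uplink
    return False


# Hypothetical labels, apart from the ExternalNetworkStorage portgroup name.
storage = Network("StorageNetwork")
external = Network("ExternalNetworkStorage", uplink=storage)
org_direct = Network("OrgNetwork-Direct", uplink=external)
org_routed = Network("OrgNetwork-Routed", uplink=external, routed=True)

print(" -> ".join(path_to_storage(org_direct)))
print("direct network crosses Edge Gateway:", crosses_edge_gateway(org_direct))
print("routed network crosses Edge Gateway:", crosses_edge_gateway(org_routed))
```

The direct variant is the one I want for storage traffic, because nothing sits between the vESX VMkernel port and the array.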

Next I upload the ESX 5.1 CD and the vCenter Server Virtual Appliance (VCVA). The VCVA is available in both .OVF and .OVA formats. Ideally, you should use the .OVF version because it uploads without any special work. If you have downloaded the .OVA, remember that the .OVA format is just a generic tarball, so any half-decent unzip tool should be able to extract it for you, leaving you with the .OVF and .VMDK files. Remember .OVA files are not natively supported in vCloud Director 5.1.
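
If you don’t have an unzip tool to hand, the same extraction can be sketched in a few lines of Python using the standard-library `tarfile` module. The `extract_ova` function name and the file names in the usage comment are hypothetical; point it at whatever .OVA you actually downloaded.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_ova(ova_path: str, dest_dir: str) -> List[str]:
    """Unpack an .OVA (a tar archive) and return the extracted file names.

    Only plain files with no directory component are extracted, which
    matches the flat layout an OVA normally has and avoids writing
    outside dest_dir.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(ova_path, "r") as archive:
        members = [
            m for m in archive.getmembers()
            if m.isfile() and Path(m.name).name == m.name
        ]
        archive.extractall(dest, members=members)
    return sorted(m.name for m in members)


# Usage (hypothetical file names):
#   files = extract_ova("vcva.ova", "vcva-extracted")
#   print(files)  # expect an .ovf descriptor plus one or more .vmdk disks
```

Afterwards, the extracted .OVF and its .VMDK files can be uploaded to the catalog in the usual way.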

The VCVA can be deployed just like any other appliance, and in my experience you are best off deploying it with DHCP settings and then re-IPing and renaming it (if need be) like any other appliance. Again, the static IP pool and guest customization do not work with the VCVA. The same goes for the vESX hosts. So one tip I can give is to make sure you have the vCNS Edge Gateway on the primary OrgNetwork, as that helps with basic connectivity during the post-configuration stage.

Start off by creating an empty vApp. I’ve found that starting with the “Build New vApp” option rather than “Add vApp from Catalog” is a little bit easier if you want to begin by defining the ESX hosts. If you want to start with the VCVA instead, then “Add vApp from Catalog” is easier, because you can add in the VCVA you recently uploaded to the catalog. It’s really up to you. It doesn’t really matter. But I’m using “Build New vApp” because I want to define my ESX hosts first…

Anyway, this post is getting a bit long. Time to break this up into a separate post…