Welcome to the Helm Winds landing page. Here, you will find the digital booklet for anyone who purchased the CD or downloaded it digitally, as […]

Gigs in April

So I’ve got some dates coming up at the end of April. There are a couple of paid gigs, together with me rocking up at local […]

Early Responses to the new Helm Winds EP

Batches of the Helm Winds EP on CD have gone out to various outlets across the country – and I’ve started to collect […]

New Year: New Single: New Video – The Helm Winds

Happy New Year to everyone. I’m pleased to release the title track from my upcoming EP. The video was very kindly created by Marry Waterson. […]

BBC Introducing – Christmas Parting Song

I’m very pleased to say that once again the BBC have picked up a song of mine. It was released on 1st Dec, and see it […]

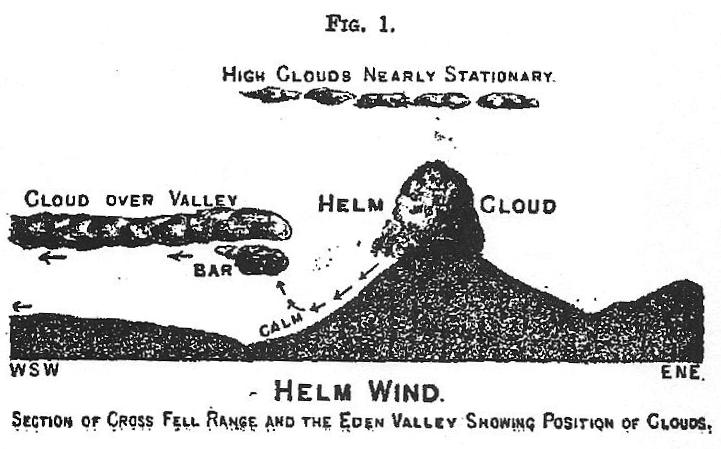

Coming Soon: “The Helm Winds” Collection of Songs

So, I have a new collection of songs coming soon, recorded for your listening pleasure. I’ve decided not to call it an EP, LP, or […]

GIG: Supporting Johnny Campbell

TICKET: https://www.fatsoma.com/e/j0gnvj6h/johnny-campbell-michelle-laverick

I’m pleased to say that my last gig of the year will be supporting Johnny Campbell. It will be a special gig as […]

GIG: Supporting Liz Simcock

I’m thrilled to announce that I will be supporting Liz Simcock as part of her Autumn/Winter Tour – I’ll be at Cafe9 in Sheffield on […]

GIG: Supporting Izzy Yardley: 3rd Sept

I’m pleased to say I’ll be supporting Izzy Yardley at Cafe9 in Sheffield on Sept 3rd. This will be the third time I’ve played at […]

GIG: Perennial: Haarlem Artspace: 10th July

I’ve been asked to perform at the Perennial Exhibition on 10th July, along with a cavalcade of musicians. Haarlem Artspace is a gallery in […]