Note: Just to say this title is meant to be a humorous and silly pun. I actually think the Amazon wizards in the main are pretty good, and in fact pretty invaluable.

Acknowledgement: I’d like to thank vExpert James Kilby for reviewing this blog post prior to publication. You can follow James on Twitter at https://twitter.com/jameskilbynet and he blogs at https://www.jameskilby.co.uk/

In my previous blog post I wrote about how important it is to plan things upfront in any cloud environment. Not just because this is good practice in system design, but because so many cloud environments are resistant to the kind of arbitrary ad-hoc SysAdmin changes that could so easily be made to fix a problem in an on-premises virtualization platform. In this post I’m turning my attention to something less highfalutin and more down in the weeds.

When I was working my way through the Pluralsight SysOps Admin training I was following the demos with my Amazon AWS Console open. Mainly playing “spot the differences”. Let me make something clear – the Pluralsight training is pretty good and an excellent foundation for getting stuck in and learning more. I believe it’s going to get harder and harder to keep ALL training materials up to date and current. Cloud environments are almost naturally more “agile” (hateful word – sorry, I have a thing against the way our industry brutalizes my native tongue). This means it’s really hard for training materials and guides to keep track. It’s partly the reason I’ve abandoned the whole step-by-step tutorials that I did in the past. I will leave that work to the big boys – like Amazon/Microsoft/Google – as they have far more resources and time. But my plan was always to go back through my notes on the course (48 pages!) to both revise what I learned and inspire new blogging content – but also to go back and research those differences I’d noted. I didn’t do that there and then whilst the video rolled. It would have slowed up my pace of the training. But now I feel I have the time to check those out.

To wit. One thing I noticed is that when you create a VPC in Amazon AWS using the wizard you get some new options that the Pluralsight videos didn’t dwell on or mention. Incidentally, as a rule I despise wizards, however in the context of Amazon AWS I would recommend them. They often automate many tasks, and thus meet certain dependencies – and speed up the process of setup (unless you decide to go down the scripting route). I think the key with the Amazon AWS wizard is understanding exactly what is being automated, and where those settings reside. This reduces the feeling that it’s the “Wizard of Oz” pulling strings behind a curtain, with you being clueless on what he’s up to. The other thing I would recommend is that if there are four different routes through a wizard – go through it four times. The best way to learn a technology is to expose yourself to the reality, rather than the theory. When I was a Microsoft Certified Trainer in the ‘90s, there was an awful lot of “you can do this configuration” but then it was never gone through. One way I expanded my knowledge at the time was actually trying these “theoretical configurations” – you certainly learned that although you can often do something, it often comes with major dental work, to replace all the teeth you lost putting it together…

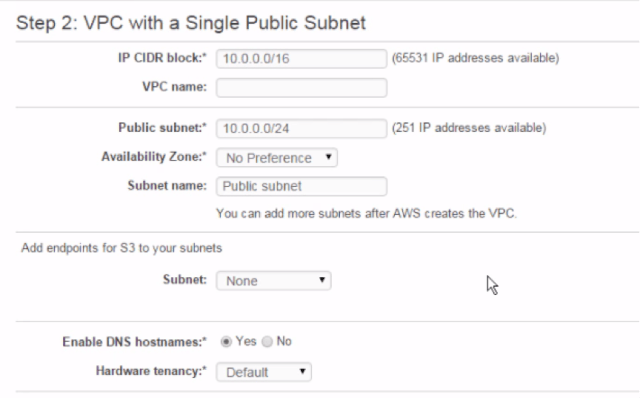

So… less pre-amble, more amble. Here’s a screengrab of the VPC wizard from Pluralsight…

So the first thing you will see with the wizard is that it pre-populates the IPv4 CIDR with 10.0.0.0/16. The VPC wizard will merrily allow you to create multiple VPCs with the SAME IPv4 CIDR. As you can see below:

Personally, I think it is not a good idea for the wizard to do this – the CIDR can NOT be easily and arbitrarily changed, and two VPCs with the same CIDR cannot be “VPC Peer Linked” together. Now, you might say that will NEVER happen, but I’m not keen on configurations that limit my options, and can’t be easily changed based on a change of circumstance. Anyway, putting this gripe to one side…
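If you want to catch this before it bites, here is a minimal sketch in Python that flags any VPCs whose IPv4 ranges overlap and so could not be peered. It only uses the standard library `ipaddress` module and doesn't talk to AWS at all. The VPC names and CIDRs are made up for illustration, and `overlapping_vpcs` is my own hypothetical helper, not anything AWS provides.

```python
import ipaddress
from itertools import combinations


def overlapping_vpcs(vpc_cidrs):
    """Return pairs of VPC names whose IPv4 CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in vpc_cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(networks), 2)
        if networks[a].overlaps(networks[b])
    ]


# Hypothetical VPCs: two left on the wizard default, one planned by hand.
vpcs = {
    "prod": "10.0.0.0/16",
    "test": "10.0.0.0/16",
    "dev": "10.1.0.0/16",
}

for a, b in overlapping_vpcs(vpcs):
    print(f"{a} and {b} overlap and could not be peered")
```

Giving each VPC its own /16 (10.0.0.0/16, 10.1.0.0/16 and so on) is one simple way to keep this list empty.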

The first new option you’ll notice is that you can now request an IPv6 CIDR block to go along with the CIDR for IPv4:

The other thing I’d caution against is these “no preference” options that often appear – basically because the “Availability Zone” a subnet lives in can become significant later on. Critically, it’s the way you place or locate where an instance resides – when you choose a subnet you’re effectively choosing an AZ. But also, when it comes to solutions that either distribute load (ELB) or ensure availability for a service (such as RDS), having multiple subnets in multiple AZs within a region is a requirement. I’d go so far as to say one “design” rule could be – for each and every VPC you make, ensure you have at least two public subnets in two different AZs. This means you meet the requirements of ELB and RDS out of the box without a major re-wiring project.
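To make that design rule concrete, here is a rough sketch of planning the subnets on paper before touching the wizard. It carves one /24 per named AZ out of the VPC CIDR and refuses to plan anything with fewer than two AZs. The function name `plan_public_subnets`, the /24 size and the AZ names are my own assumptions for the example. It makes no AWS calls.

```python
import ipaddress


def plan_public_subnets(vpc_cidr, azs, new_prefix=24):
    """Carve one public subnet per named AZ out of the VPC's IPv4 CIDR."""
    if len(set(azs)) < 2:
        raise ValueError("name at least two different AZs")
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    # zip stops after the last AZ, so only as many blocks as needed are taken.
    return {az: str(block) for az, block in zip(azs, blocks)}


# Example AZ names for the Ohio region; substitute your own.
plan = plan_public_subnets("10.0.0.0/16", ["us-east-2a", "us-east-2b"])
for az, cidr in plan.items():
    print(az, cidr)
```

The point is simply that the AZ is chosen explicitly for every subnet, rather than left at “no preference”.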

Whilst I was writing this blogpost, Steve Chambers released a blogpost about the dangers of mismanaging IP ranges in Amazon AWS. I think if you’re interested it’s well worth a read…

Additionally, I don’t think “public subnet” is a terrifically good name – this is probably less significant for subnets – but it cascades into all things AWS. Establishing a very good naming convention for objects, and their “Name” tag, is important to me. All too often later down the line you go to select these objects in wizards, and it’s hard to ID them, or in some cases even work out in which VPC these subnets or security groups reside. The trouble is finding a naming convention that preserves uniqueness, whilst being human readable – and being easy to see in UIs that sometimes only leave enough space for 8 characters. I guess if you only have one VPC it’s neither here nor there – but once you have multiple VPCs in multiple regions it becomes more telling. There’s a number of times I’ve had to double-double-check I’m in the right VPC in the right region for the task I’m doing. So it’s tempting to concatenate strings like

SubnetID-VPC-Region-AZ (Public01-PROD-US-OH-AZ1a)

GroupName-VPC-Region (WindowsRDP-PROD-US-OH)

Note: Subnets are specific to an AZ, whereas Security Groups belong to a VPC (and so to a region) rather than to any one AZ. I’m still not sure if this is a good naming convention. Is it readable? Practical? Too lengthy?
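If you did want to enforce a convention like this, a tiny helper keeps the ordering consistent and lets you see how long the names get. This is only a sketch of the idea. The function names and the field order are my own assumptions, taken from the two examples in brackets above.

```python
def subnet_name(subnet_id, vpc, region, az):
    """Build a subnet Name tag, e.g. Public01-PROD-US-OH-AZ1a."""
    return "-".join([subnet_id, vpc, region, az])


def security_group_name(group, vpc, region):
    """Build a security group Name tag, e.g. WindowsRDP-PROD-US-OH."""
    return "-".join([group, vpc, region])


for name in (
    subnet_name("Public01", "PROD", "US-OH", "AZ1a"),
    security_group_name("WindowsRDP", "PROD", "US-OH"),
):
    # Print the length too, since some UIs truncate long names.
    print(name, len(name))
```

Seeing the lengths printed out makes the “too lengthy?” question easier to judge against whatever the console has room to display.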

Anyway, I’m digressing. As is my wont – what was glossed over in the training for reasons of brevity were these other settings towards the bottom of the new VPC dialog, such things as “Service Endpoints” and so on. As they either weren’t there when the training was created, or have since been renamed, I needed to work out what they were… There is no “magic” here – it’s called “googling”

Service Endpoints:

One was referred to as “add service endpoints” in the old UI. So this is actually quite an old feature that Amazon have just progressively made easier and easier to configure (less faffing about with ARNs and such like). I found an article about S3 (Simple Storage Service) endpoints that goes as far back as 2015. You can use the VPC to “create a logically isolated section of the AWS Cloud, with full control over a virtual network that you define”. It’s still not clear to me WHY you would want to do this, but you can. So there.

It looks like Amazon is “redacting” the association with S3, so you can have “endpoints” to any kind of future service provided. This feels a little like ‘future proofing’ the terminology so it can be used with any service they choose to subsequently offer.

Enable DNS Hostnames:

Source: http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpc-dns.html

This was an easy-peasy one to look up, and to be honest after a few weeks of using Amazon AWS and playing with Route53 – it’s easy to work out what this setting does. It’s responsible for assigning an internally resolvable FQDN to many IP addressable resources (such as instances and ELB sources). You see these FQDNs on the description page of most instances – both the internal IP FQDN as well as the external IP FQDN, if an “elastic IP” has been associated with the instance:

I think this is incredibly useful, especially as these names can be referenced in your own public DNS using CNAME records. It offers some protection from the change of IP address that can happen.
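For what it’s worth, these default names follow a predictable pattern built from the IP address. The sketch below reconstructs that pattern as I understand it from the AWS VPC DNS documentation for IP-based names (us-east-1 uses slightly different suffixes to every other region). Treat it as an illustration rather than a reference. The function names and the sample addresses are my own.

```python
def default_private_dns(private_ip, region):
    """Guess the internal FQDN AWS assigns for a private IPv4 address."""
    suffix = "ec2.internal" if region == "us-east-1" else f"{region}.compute.internal"
    return f"ip-{private_ip.replace('.', '-')}.{suffix}"


def default_public_dns(public_ip, region):
    """Guess the external FQDN AWS assigns for a public IPv4 address."""
    if region == "us-east-1":
        suffix = "compute-1.amazonaws.com"
    else:
        suffix = f"{region}.compute.amazonaws.com"
    return f"ec2-{public_ip.replace('.', '-')}.{suffix}"


# Sample addresses only: 203.0.113.0/24 is reserved for documentation.
print(default_private_dns("10.0.0.12", "us-east-2"))
print(default_public_dns("203.0.113.25", "us-east-2"))
```

Because the name is derived from the address, an instance whose public IP changes gets a new public name as well, which is worth bearing in mind before pointing a CNAME at one.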

One thing I did learn is you can turn off this default naming convention, and instead have a “private” DNS domain located in Route53. In Route53 it is possible to register a new domain, and Route53 will also create the zone for you – this is what I did to establish mynamedomain.net in a previous blog post. But you can also use Route53 to have a hosted zone that isn’t publicly registered, but is accessible to your instances within AWS.

However, this appears not to be dynamic, and you have to create A records manually. That might be fine for instances that live permanently with an elastic IP that never changes – but it doesn’t really fit in with the ethos that your instances are just herds of expendable cattle, because your application is some scale-out solution like Hadoop.

Anyway, I asked amongst my pals on the vExpert AWS Slack Channel for guidance. Pretty much no one could give a good example of turning this off… unless your application requires a “Directory Service” like Microsoft Active Directory, which integrates closely with its own DNS service. Some might say… that this sort of corporate application, which requires monolithic services in this way, isn’t a good use-case for running in a Public Cloud – as the application more closely resembles a herd of cats than a herd of cattle. However, I don’t think it’s for me to second-guess those sorts of decisions. If that’s what the customer requires/needs then who am I to argue? I was surprised to hear this would work – as I assumed AD/DNS in Windows would require a static IP, but apparently, although Windows complains about the DHCP address assigned from the internal pool, it does actually work.

ClassicLink:

“Up until now, legacy EC2 instances that were not running within a VPC (commonly known as EC2-Classic) had to use public IP addresses or tunneling to communicate with AWS resources in a VPC. They could not take advantage of the higher throughput and lower latency connectivity available for inter-instance communication. This model also resulted in additional bandwidth charges and has some undesirable security implications. In order to allow EC2-Classic instances to communicate with these resources, we are introducing a new feature known as ClassicLink. You can now enable this feature for any or all of your VPCs and then put your existing Classic instances in to VPC security groups.”

Conclusion:

I don’t have a particular conclusion to share in this blog post. One thing I want to do is research the difference between just running with a VPC with subnets that are public and private – compared to running with a VPC that has NAT between public and private. I think there is some ‘confusion’ around the terms ‘private’ and ‘public’ and subnets, which I personally need to get clearer in my head than I have currently. That might be the subject of my next blog post…